Note

This document is for a development version of Ceph.

Block Devices and Nomad

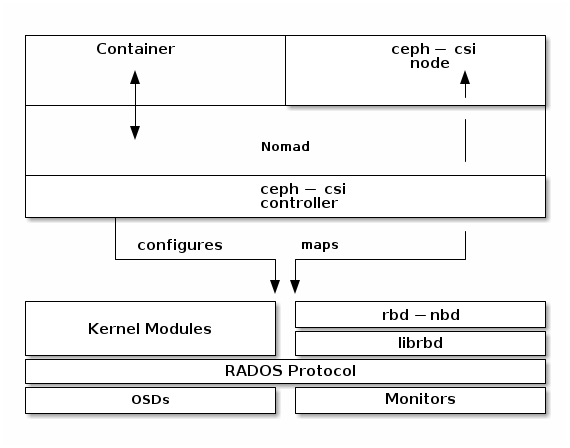

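Like Kubernetes, Nomad can use Ceph Block Devices. This is made possible by ceph-csi, which allows you to dynamically provision RBD images or import existing RBD images.

Every version of Nomad is compatible with ceph-csi. However, the procedures and guidance in this document were produced against Nomad v1.1.2, the latest version available at the time of writing.

To use Ceph Block Devices with Nomad, you must install ceph-csi in your Nomad environment. The diagram above shows the Nomad/Ceph technology stack.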

Note

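Nomad has many possible task drivers, but this example uses only a Docker container.

Important

By default, ceph-csi uses the RBD kernel module, which may not support all Ceph CRUSH tunables or RBD image features.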

Create a Pool

By default, Ceph block devices use the rbd pool. Make sure your Ceph cluster is running, then create a pool for Nomad persistent storage:

ceph osd pool create nomad

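See Create a Pool for details on specifying the number of placement groups for your pools, and see Placement Groups for details on how many placement groups you should set for your pools.

A newly created pool must be initialized before use. Use the rbd tool to initialize the pool:

rbd pool init nomad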
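As a rough illustration only, the sketch below implements the older rule of thumb from the Ceph placement-group guidance: (OSDs * 100) / replica count, rounded up to a power of two. The function name `suggested_pg_count` is hypothetical. On recent releases, the pg_autoscaler may manage this value for you.

```python
import math


def suggested_pg_count(num_osds: int, pool_size: int,
                       target_pgs_per_osd: int = 100) -> int:
    """Rule-of-thumb PG count for a single pool (hypothetical helper).

    Assumption: follows the older Ceph heuristic
    (OSDs * target PGs per OSD) / replica count, rounded up to a power of two.
    """
    if num_osds <= 0 or pool_size <= 0 or target_pgs_per_osd <= 0:
        raise ValueError("all arguments must be positive")
    raw = num_osds * target_pgs_per_osd / pool_size
    # Round up to the next power of two; never return less than 1.
    exponent = max(0, math.ceil(math.log2(raw)))
    return 1 << exponent


# Example: 3 OSDs with 3-way replication.
print(suggested_pg_count(3, 3))  # 128
```

The result could be passed as the optional pg-num argument, for example `ceph osd pool create nomad 128`.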

Configure ceph-csi

Ceph Client Authentication Setup

Create a new user for Nomad and ceph-csi. Run the following command and record the generated key:

$ ceph auth get-or-create client.nomad mon 'profile rbd' osd 'profile rbd pool=nomad' mgr 'profile rbd pool=nomad'
[client.nomad]
    key = AQAlh9Rgg2vrDxAARy25T7KHabs6iskSHpAEAQ==
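If you script this step, you can extract the key from the keyring-format output shown above. The following is a minimal sketch that assumes the output is INI-style, as in the example. `keyring_key` is a hypothetical helper and is not part of any Ceph tool.

```python
import configparser


def keyring_key(keyring_text: str, entity: str = "client.nomad") -> str:
    """Return the cephx key for `entity` from keyring-format text.

    Assumption: the text has an `[entity]` section header followed by a
    `key = ...` line, as printed by `ceph auth get-or-create`.
    """
    # Interpolation is disabled so that no character in a key is treated specially.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(keyring_text)
    return parser[entity]["key"]
```

Alternatively, `ceph auth get-key client.nomad` prints only the key.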

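Configure Nomad

Configure Nomad to Allow Containers to Use Privileged Mode

By default, Nomad does not allow containers to use privileged mode. To allow it, add the following configuration block to /etc/nomad.d/nomad.hcl: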

plugin "docker" {
  config {
    allow_privileged = true
  }
}

Load the rbd Module

Nomad must have the rbd module loaded. Run the following command to confirm that the rbd module is loaded:

$ lsmod | grep rbd
rbd                    94208  2
libceph               364544  1 rbd

If the rbd module is not loaded, load it:

sudo modprobe rbd
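The `grep` above also matches lines where rbd appears only in the "Used by" column, such as the libceph line. A stricter check compares only the first column. This sketch assumes lsmod-style input, and the helper name `module_loaded` is hypothetical.

```python
def module_loaded(lsmod_text: str, name: str = "rbd") -> bool:
    """Return True if `name` is listed as a module in lsmod-style text.

    Only the first whitespace-separated field of each line (the module
    name) is compared, so dependents such as `libceph ... rbd` do not match.
    """
    for line in lsmod_text.splitlines():
        fields = line.split()
        if fields and fields[0] == name:
            return True
    return False
```

On Linux, you can pass the contents of /proc/modules instead, because its first column is also the module name.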

Restart Nomad

Restart Nomad:

sudo systemctl restart nomad

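Create the ceph-csi Controller and Plugin Nodes

The ceph-csi plugin requires two components:

Controller plugin: communicates with the provider's API.

Node plugin: executes tasks on the client.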

Note

We will set the ceph-csi version in these files. See ceph-csi release for information about ceph-csi's compatibility with other versions.

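Configure the Controller Plugin

The controller plugin requires the Ceph monitor addresses of the Ceph cluster. Collect both (1) the Ceph cluster's unique fsid and (2) the monitor addresses: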

$ ceph mon dump
<...>
fsid b9127830-b0cc-4e34-aa47-9d1a2e9949a8
<...>
0: [v2:192.168.1.1:3300/0,v1:192.168.1.1:6789/0] mon.a
1: [v2:192.168.1.2:3300/0,v1:192.168.1.2:6789/0] mon.b
2: [v2:192.168.1.3:3300/0,v1:192.168.1.3:6789/0] mon.c

Generate a ceph-csi-plugin-controller.nomad file similar to the example below. Use the fsid as the value of "clusterID" and the monitor addresses as the values of "monitors":

job "ceph-csi-plugin-controller" {
  datacenters = ["dc1"]
  group "controller" {
    network {
      port "metrics" {}
    }
    task "ceph-controller" {
      template {
        data        = <<EOF
[{
    "clusterID": "b9127830-b0cc-4e34-aa47-9d1a2e9949a8",
    "monitors": [
        "192.168.1.1",
        "192.168.1.2",
        "192.168.1.3"
    ]
}]
EOF
        destination = "local/config.json"
        change_mode = "restart"
      }
      driver = "docker"
      config {
        image = "quay.io/cephcsi/cephcsi:v3.3.1"
        volumes = [
          "./local/config.json:/etc/ceph-csi-config/config.json"
        ]
        mounts = [
          {
            type          = "tmpfs"
            target        = "/tmp/csi/keys"
            readonly      = false
            tmpfs_options = {
              size = 1000000 # size in bytes
            }
          }
        ]
        args = [
          "--type=rbd",
          "--controllerserver=true",
          "--drivername=rbd.csi.ceph.com",
          "--endpoint=unix://csi/csi.sock",
          "--nodeid=${node.unique.name}",
          "--instanceid=${node.unique.name}-controller",
          "--pidlimit=-1",
          "--logtostderr=true",
          "--v=5",
          "--metricsport=$${NOMAD_PORT_metrics}"
        ]
      }
      resources {
        cpu    = 500
        memory = 256
      }
      service {
        name = "ceph-csi-controller"
        port = "metrics"
        tags = [ "prometheus" ]
      }
      csi_plugin {
        id        = "ceph-csi"
        type      = "controller"
        mount_dir = "/csi"
      }
    }
  }
}

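Configure the Plugin Nodes

Generate a ceph-csi-plugin-nodes.nomad file similar to the example below. Use the fsid as the value of "clusterID" and the monitor addresses as the values of "monitors":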

job "ceph-csi-plugin-nodes" {
  datacenters = ["dc1"]
  type        = "system"
  group "nodes" {
    network {
      port "metrics" {}
    }
    task "ceph-node" {
      driver = "docker"
      template {
        data        = <<EOF
[{
    "clusterID": "b9127830-b0cc-4e34-aa47-9d1a2e9949a8",
    "monitors": [
        "192.168.1.1",
        "192.168.1.2",
        "192.168.1.3"
    ]
}]
EOF
        destination = "local/config.json"
        change_mode = "restart"
      }
      config {
        image = "quay.io/cephcsi/cephcsi:v3.3.1"
        volumes = [
          "./local/config.json:/etc/ceph-csi-config/config.json"
        ]
        mounts = [
          {
            type          = "tmpfs"
            target        = "/tmp/csi/keys"
            readonly      = false
            tmpfs_options = {
              size = 1000000 # size in bytes
            }
          }
        ]
        args = [
          "--type=rbd",
          "--drivername=rbd.csi.ceph.com",
          "--nodeserver=true",
          "--endpoint=unix://csi/csi.sock",
          "--nodeid=${node.unique.name}",
          "--instanceid=${node.unique.name}-nodes",
          "--pidlimit=-1",
          "--logtostderr=true",
          "--v=5",
          "--metricsport=$${NOMAD_PORT_metrics}"
        ]
        privileged = true
      }
      resources {
        cpu    = 500
        memory = 256
      }
      service {
        name = "ceph-csi-nodes"
        port = "metrics"
        tags = [ "prometheus" ]
      }
      csi_plugin {
        id        = "ceph-csi"
        type      = "node"
        mount_dir = "/csi"
      }
    }
  }
}

Start the Plugin Controller and Nodes

To start the plugin controller and the Nomad node plugin, run the following commands:

nomad job run ceph-csi-plugin-controller.nomad

nomad job run ceph-csi-plugin-nodes.nomad

Nomad will download the ceph-csi image.

Check the plugin status after a few minutes:

$ nomad plugin status ceph-csi
ID                   = ceph-csi
Provider             = rbd.csi.ceph.com
Version              = 3.3.1
Controllers Healthy  = 1
Controllers Expected = 1
Nodes Healthy        = 1
Nodes Expected       = 1

Allocations
ID        Node ID   Task Group  Version  Desired  Status   Created    Modified
23b4db0c  a61ef171  nodes       4        run      running  3h26m ago  3h25m ago
fee74115  a61ef171  controller  6        run      running  3h26m ago  3h25m ago
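The plugin is ready when the healthy counts equal the expected counts for both controllers and nodes. The following minimal sketch checks this from the text output, assuming the `Key = Value` layout shown above. `plugin_healthy` is a hypothetical helper.

```python
def plugin_healthy(status_text: str) -> bool:
    """Return True if healthy counts match expected counts.

    Parses the `Key = Value` lines printed by `nomad plugin status` and
    ignores the Allocations table, whose rows contain no `=`.
    """
    fields = {}
    for line in status_text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            fields[key.strip()] = value.strip()
    wanted = ("Controllers Healthy", "Controllers Expected",
              "Nodes Healthy", "Nodes Expected")
    try:
        counts = {name: int(fields[name]) for name in wanted}
    except (KeyError, ValueError):
        return False  # missing or non-numeric fields: treat as not ready
    return (counts["Controllers Healthy"] == counts["Controllers Expected"]
            and counts["Nodes Healthy"] == counts["Nodes Expected"])
```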

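Using Ceph Block Devices

Create an rbd Image

ceph-csi requires cephx credentials to communicate with the Ceph cluster. Generate a ceph-volume.hcl file similar to the example below, using the newly created nomad user ID and its cephx key: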

id           = "ceph-mysql"
name         = "ceph-mysql"
type         = "csi"
plugin_id    = "ceph-csi"
capacity_max = "200G"
capacity_min = "100G"

capability {
  access_mode     = "single-node-writer"
  attachment_mode = "file-system"
}

secrets {
  userID  = "nomad"
  userKey = "AQAlh9Rgg2vrDxAARy25T7KHabs6iskSHpAEAQ=="
}

parameters {
  clusterID     = "b9127830-b0cc-4e34-aa47-9d1a2e9949a8"
  pool          = "nomad"
  imageFeatures = "layering"
  mkfsOptions   = "-t ext4"
}
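The clusterID is the fsid from `ceph mon dump`, and the userKey is the key generated for client.nomad, so you can also produce this file from those two values. The following sketch uses a hypothetical `render_volume_spec` helper that reproduces the example's fields, assuming the same pool, capacities, and image features.

```python
def render_volume_spec(cluster_id: str, user_key: str,
                       user_id: str = "nomad", pool: str = "nomad",
                       volume_id: str = "ceph-mysql") -> str:
    """Return ceph-volume.hcl text (hypothetical helper, fields as in the example)."""
    # Doubled braces produce literal { and } inside the f-string.
    return f"""id           = "{volume_id}"
name         = "{volume_id}"
type         = "csi"
plugin_id    = "ceph-csi"
capacity_max = "200G"
capacity_min = "100G"

capability {{
  access_mode     = "single-node-writer"
  attachment_mode = "file-system"
}}

secrets {{
  userID  = "{user_id}"
  userKey = "{user_key}"
}}

parameters {{
  clusterID     = "{cluster_id}"
  pool          = "{pool}"
  imageFeatures = "layering"
  mkfsOptions   = "-t ext4"
}}
"""
```

Writing the returned string to ceph-volume.hcl gives a file equivalent to the example.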

After you have generated the ceph-volume.hcl file, create the volume:

nomad volume create ceph-volume.hcl

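Use an rbd Image with a Container

As an exercise in using an rbd image with a container, modify the HashiCorp Nomad stateful example.

Generate a mysql.nomad file similar to the following example: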

job "mysql-server" {
  datacenters = ["dc1"]
  type        = "service"
  group "mysql-server" {
    count = 1
    volume "ceph-mysql" {
      type            = "csi"
      attachment_mode = "file-system"
      access_mode     = "single-node-writer"
      read_only       = false
      source          = "ceph-mysql"
    }
    network {
      port "db" {
        static = 3306
      }
    }
    restart {
      attempts = 10
      interval = "5m"
      delay    = "25s"
      mode     = "delay"
    }
    task "mysql-server" {
      driver = "docker"
      volume_mount {
        volume      = "ceph-mysql"
        destination = "/srv"
        read_only   = false
      }
      env {
        MYSQL_ROOT_PASSWORD = "password"
      }
      config {
        image = "hashicorp/mysql-portworx-demo:latest"
        args  = ["--datadir", "/srv/mysql"]
        ports = ["db"]
      }
      resources {
        cpu    = 500
        memory = 1024
      }
      service {
        name = "mysql-server"
        port = "db"
        check {
          type     = "tcp"
          interval = "10s"
          timeout  = "2s"
        }
      }
    }
  }
}

Start the job:

nomad job run mysql.nomad

Check the job status:

$ nomad job status mysql-server
...
Status        = running
...
Allocations
ID        Node ID   Task Group    Version  Desired  Status   Created  Modified
38070da7  9ad01c63  mysql-server  0        run      running  6s ago   3s ago
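To script the status check, one option is to confirm that every row in the Allocations table reports running. This sketch assumes the column order shown above and whitespace-separated fields. `allocations_running` is a hypothetical helper.

```python
def allocations_running(status_text: str) -> bool:
    """Return True if every Allocations row has Status "running".

    Assumes the columns ID, Node ID, Task Group, Version, Desired, Status,
    Created, Modified. After splitting on whitespace, Status is field 6.
    """
    stripped = [line.strip() for line in status_text.splitlines()]
    if "Allocations" not in stripped:
        return False
    # Non-blank lines after the "Allocations" heading; the first is the header.
    after = [line for line in stripped[stripped.index("Allocations") + 1:] if line]
    rows = [line.split() for line in after[1:]]
    return bool(rows) and all(len(r) >= 6 and r[5] == "running" for r in rows)
```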

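To check that the data persist, modify the database, purge the job, and then create the job again from the same file. The same RBD image will be used (re-used, really).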

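Brought to you by the Ceph Foundation

The Ceph Documentation is a community resource funded and hosted by the non-profit Ceph Foundation. If you would like to support this and our other efforts, please consider joining now.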